"Hey Siri, can I trust you?"

A qualitative study on how expectation gaps, privacy concerns, and emotional reactions shape user trust in AI voice assistants.


Context

With over four billion AI virtual assistants (VAs) deployed worldwide, tools like Siri, Alexa, Google Assistant, and ChatGPT are reshaping how people interact with technology. These systems promise convenience - helping with navigation, music, or quick information retrieval - but concerns about trust, accuracy, and privacy remain.

Past research has shown that users’ mental models (their internal beliefs about how technology works) play a critical role in shaping these concerns. Misaligned expectations can lead to frustration, disengagement, and decreased trust.

This study investigated how adult users’ mental models of Siri influenced their trust, behaviors, and emotional experiences during everyday interactions.

Methodology

Approach: User interviews ~30 minutes each, via WebEx

Participants: 5 young adult professionals (ages 25–35)

Analysis: Thematic coding to identify recurring patterns and themes

Research Question

How do adult users’ mental models of AI virtual voice assistants, specifically Siri, influence their behaviors, concerns, and perceived limitations when using it for everyday information-seeking tasks?

The study used qualitative user interviews: five in total, each lasting approximately 30 minutes and guided by 13 questions. All interviews were conducted remotely over WebEx video calls.

Participants were working professionals aged 25–35 who used Siri to access everyday information or perform simple tasks. They were recruited from my personal LinkedIn network.

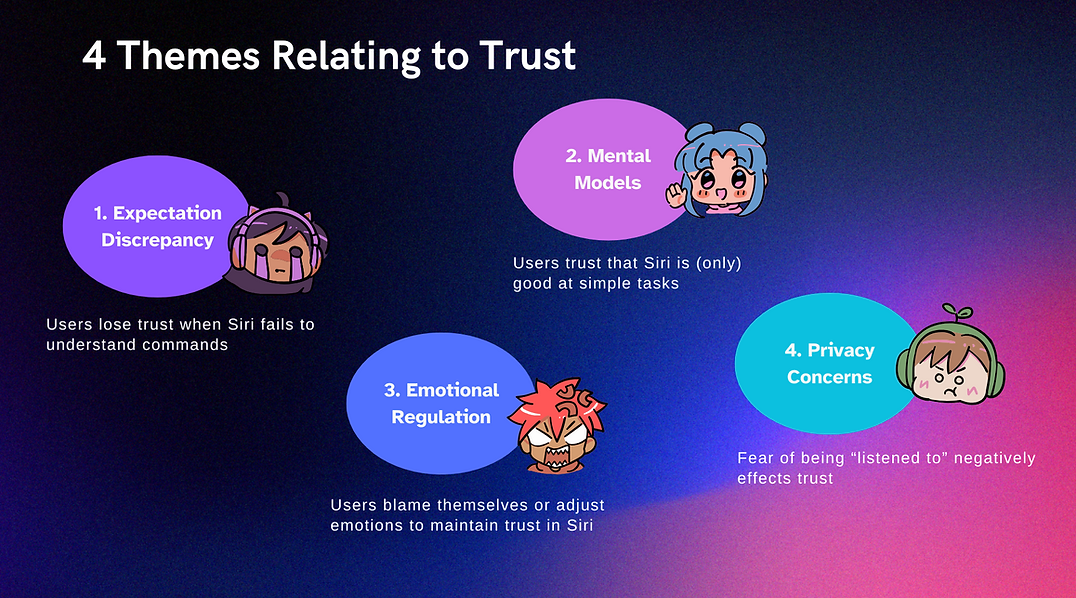

Thematic analysis of the interviews surfaced four key findings, each of which shaped the overarching theme of user trust in Siri:

Key Findings

Expectation Discrepancy

- Users expected Siri to handle more complex queries but grew frustrated when it failed.
- Mismatched expectations led to lost trust and manual task completion.

Mental Models

- Participants believed Siri was useful only for simple tasks (timers, quick facts).
- This constrained perception discouraged experimentation with more advanced use cases.

Emotional Regulation

- Users expressed frustration when Siri didn’t understand them.
- Many adjusted their tone (speaking slower, louder, or clearer) even while knowing logically it wouldn’t help.
- This emotional labor became part of the user burden, deteriorating trust over time.

Privacy Concerns

- Users hesitated to ask personal questions, fearing Siri might “always be listening.”
- This concern reduced trust and often pushed users to other platforms like ChatGPT.

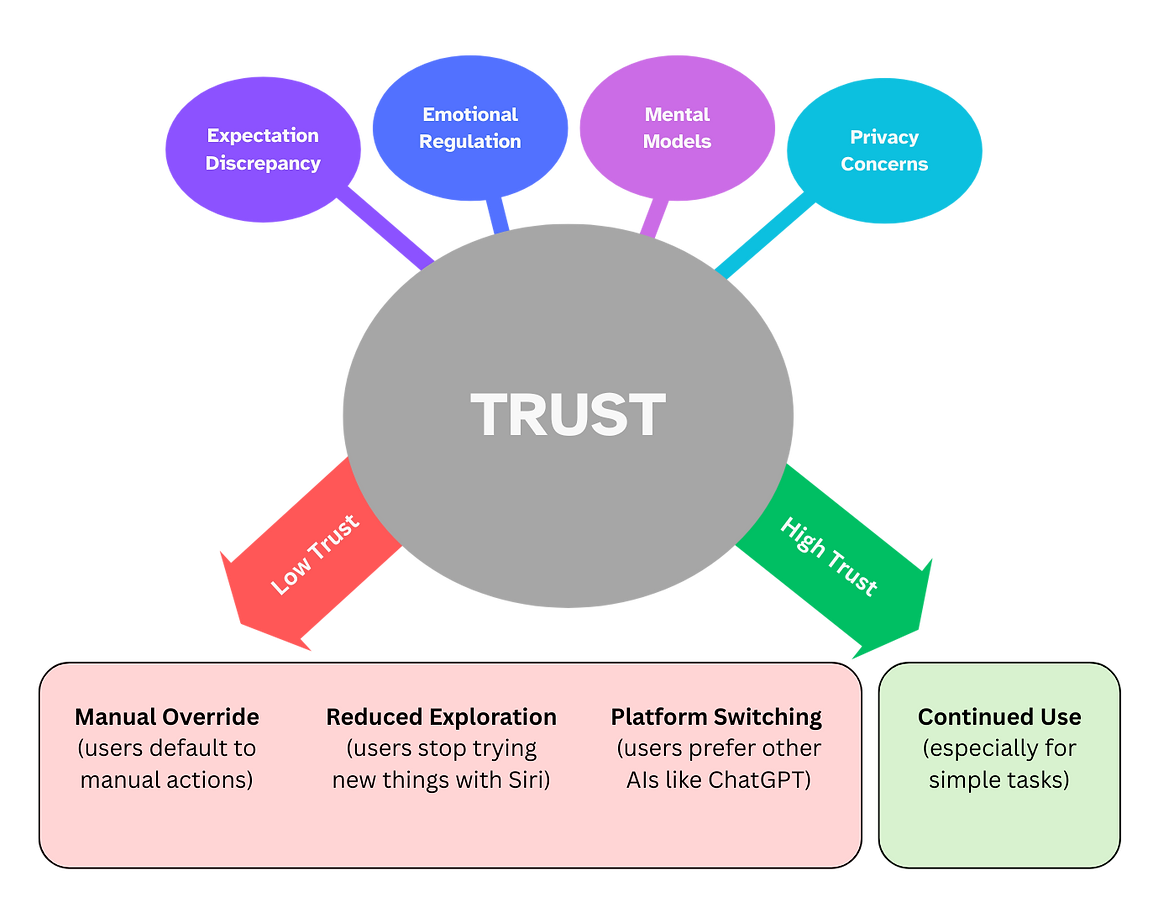

All four themes influence how much users trust Siri. When trust is low, users look up information or complete tasks manually. They also experiment with Siri less, and some stop using it altogether and turn to other AI platforms like ChatGPT.

When trust is high, on the other hand, people continue to use Siri, but mostly for simple tasks they already know it can handle.

These behavioral patterns show how user actions both reflect and reinforce their level of trust in Siri. When trust is low, participants fall back on manual workarounds, avoid trying new features, or even switch platforms, which further limits engagement. When trust is higher, use remains constrained to only the simplest tasks.

Design Implications

Because trust is the central factor shaping behavior, the design implications must focus on building and maintaining it—through clearer expectations, incremental feedback, stronger privacy assurances, and support for the emotional side of interaction.

1. Set clearer expectations: Transparent onboarding to explain Siri’s strengths and limitations.

2. Build incremental trust: Offer feedback on why tasks succeed or fail.

3. Improve privacy transparency: Clearer privacy controls and communication around data use.

4. Support emotional experience: Design responses that acknowledge user frustration and help de-escalate negative emotions.

Conclusion

Overall, users’ mental models shape trust and engagement with Siri. When users expect limited capabilities, they use Siri cautiously, and each failure reinforces their distrust.

The theme of emotional regulation offers a further insight: users described escalating frustration when Siri failed to understand them, and they adjusted their tone despite knowing logically it would not help.

This emotional labor suggests an underexplored area of human-VA interaction: managing emotions becomes part of the user burden, further deteriorating trust over time.

To address this gap, designers need to consider not only functional improvements but also the emotional demands placed on users.